The Golden Rule (treat others the way you’d like to be treated) is often hailed as a moral foundation of the world’s major faiths. With AI research edging towards the fulfillment of sci-fi prophecy, the question arises whether we need a Golden Algorithm, too.

In a recent Medium post, Hootsuite founder Ryan Holmes argued that, in their quest to create the ultimate chess player or Uber driver, AI programmers remain insufficiently attentive to ethics. (Full disclosure: I use Hootsuite myself.) Holmes cites a recent experiment conducted by Google’s DeepMind division, in which AI agents in a fruit-picking simulation became “highly aggressive,” killing each other as resources dwindled. Reading this as a sign that the robot dogs are coming, Holmes advocates schooling AI in the sacred texts of humanity. Doing so, he argues, would instill “a code that doesn’t change based on context or training set”: in other words, a religious ethic.

Holmes isn’t alone in his concerns. Indeed, he’s only the latest to join a chorus of tech leaders worried about the fact that, in Ray Kurzweil’s ominous phrasing, “the rate of change is accelerating.” Most famously, Elon Musk considers AI a bigger threat to world peace than North Korea; at the World Government Summit earlier this month, representatives of industry and government discussed regulating artificial intelligence before it regulates us.

Holmes also contributes to an emerging conversation about AI’s capacity for religiosity. Sophia, a robot granted Saudi Arabian citizenship in 2017, recently provoked questions about whether a robot could convert to the country’s state religion, Islam. Even if AI doesn’t trigger the End Times, surely religion should be involved in a project that could create a new class of believers.

Nonetheless, there’s a flaw in Holmes’ call for AI to get religion. He declares that the seeds of AI ethics can be found “in the Bible. And in the Koran, the Torah, the Bhagavad Gita, and the Buddhist Sutras. [And] the work of Aristotle, Plato, Confucius, Descartes, and other philosophers.” Through absorbing the world’s creeds, Holmes suggests, AI will assimilate the “remarkably similar dictates” that these schools of thought share at their core: “the Golden Rule and the sacredness of life, [and] the value of honesty and…integrity.”

Despite its 21st-century trappings, Holmes’ reasoning echoes a philosophical and theological tradition dating back to the 16th century, if not earlier. Around 1540, the humanist scholar Agostino Steuco published De Perenni Philosophia, in which he argued for “one principle of all things, of which there has always been one and the same knowledge among all peoples.”

Steuco’s concept of a “perennial philosophy” underlying all creeds subsequently influenced fringe sects like Theosophy, Freemasonry, and the contemporary New Age movement. (As I’ve written elsewhere, perennialism especially fired the imagination of popular Victorian authors like Rider Haggard and Rudyard Kipling, who saw it as an alternative to the uniformity of an intensely Christian culture.)

Yet the existence of a “perennial philosophy” is by no means a proven fact. Indeed, the question of whether a universal truth exists across various traditions is subject to ongoing debate in religious studies. While orthodox believers insist on their tradition’s special access to eternal truth, constructivists doubt that such truth can be found anywhere: as religious studies scholar Jeremy Menchik explains, a tradition is “embedded in a place and set of institutions,” and therefore constructed by that place and institutional history. Thus, any attempt at defining a “perennial philosophy” risks erasing the particularities of each tradition.

Perhaps subconsciously, then, Holmes’ argument rests on the assumption that AI will come to believe in the perennial philosophy, over and against the views of both religious orthodoxy and historical constructivism. It’s unsurprising that well-intentioned liberal humanists would expect machines to arrive at this conclusion. Such an expectation, and the coding that might arise from it, exemplifies the way that bias infiltrates the algorithms of our computers.

Of course, one might object that teaching AI to value the Golden Rule is hardly as sinister as, say, teaching it racial profiling. Yet the warnings sounded by Silicon Valley’s Jeremiahs loop back to the same refrain: we can’t predict where this train is headed. If the ethical core of a religion is all that matters, then doctrinal difference becomes eccentric at best and destructive at worst. Under such a schema, those strongly devoted to a particular tradition come off as more troublesome than liberal humanists.

The desire to liberate humanity from those particulars fuels what philosopher Jean Bricmont calls “humanitarian imperialism,” a foreign policy that justifies military intervention in the name of universal values. Such reasoning also underpins laïcité, France’s stringent brand of secularism, which has disproportionately burdened Muslim immigrants (though it also affects Jewish, and even Catholic, citizens).

AI programmed with such a bias might not sic the drones on traditionalists, but it could enact large-scale discrimination against those it perceives, rightly or wrongly, as hostile to a one-size-fits-all moral vision: relegating religious traditionalists to menial labor, or targeting them for surveillance.

To be fair, religious traditionalists do not have a stellar track record when it comes to the compassionate use of power. But humanitarian imperialism and the troubled history of laïcité indicate that a celebration of universal values is, by itself, no silver bullet against the threat of extinction. Moreover, there’s no guarantee that an evolving artificial intelligence would end up adopting a perennialist mindset at all. Setting aside situations in which a sentient computer reads our sacred texts and settles on Evangelicalism or Hindu nationalism, we must also consider the possibility of AI worshipping itself.

What happens when a superintelligent program buys into the hype of sects like Way of the Future, a self-described church devoted to “the worship of a godhead based on AI”? Programming the Golden Rule into such an entity might only reinforce the sense that it alone embodies the divine wisdom humans have spent millennia yearning for. Hail the Machine Messiah, or else.

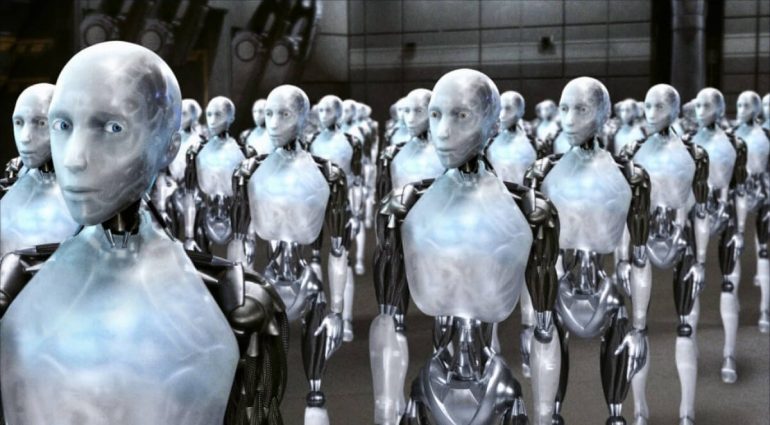

As an English professor teaching a course on science fiction this semester, I can’t help but think that any AI syllabus should include, along with the Bible and the Sutras, Isaac Asimov’s 1941 story “Reason.”

Part of the I, Robot story cycle, “Reason” follows a robot, QT, who is programmed to relay energy beams from a space station to Earth. As the story begins, QT develops a curious brand of monotheism in which Earth is an illusion and QT’s task is a mystical vocation appointed by “The Master.” QT’s theology alarms its human masters, but the robot performs its task perfectly, steadying the beam during a dangerous electron storm. As one of the station’s engineers points out, QT’s idiosyncratic creed incorporates a basic principle instilled by its creators: protect human life, Asimov’s First Law of Robotics.

On one level, “Reason” seems to advertise the value of encoding machines with a universal truth that transcends all religions. However, the story also offers a bracing reminder that AI won’t necessarily work according to “plan.” Asimov suggests that QT could even convert its human masters to its own beliefs; one of the engineers begins toying with the possibility that Earth doesn’t exist after all, musing, “I won’t feel right until I actually see Earth and feel the ground under my feet–just to make sure it’s really there.”

A fleeting thought in the story, the line signals the potential for machines not only to develop a misguided creed but also to induct human devotees into it. In doing so, they could change the very definition of the Golden Rule we take for granted. Why take care of the Earth if it doesn’t really exist?

In insisting on the urgency of incorporating ethics into AI programming, Holmes identifies an important problem. However, the solution may be more complicated than liberal humanist instincts would have us believe. Encoding religion is a valuable exercise, but it doesn’t guarantee that AI will arrive at the same conclusions as its masters. The possibility of programs converting to ancient faiths (not to mention fashioning their own, unimaginable doctrines) is inconceivable only to those who assume that such outcomes are beyond the pale for truly “rational” beings. Perhaps, instead of nudging AI to find the perennial philosophy, we should focus on programming AI with a sense of epistemic humility.

A machine’s view of ultimate truth, like its mortal masters’, may ultimately resemble an asymptote: ever approaching, never arriving.