The race to build useful AI is heating up. Headlines about how AI could change the world have given way to two simple questions: how does it deliver value, and how does it scale?

For enterprises in particular, AI use is expected to grow rapidly: a new study projects that 80 percent of enterprises will use GenAI in some way by 2026. Further, enterprise spending on AI tooling is expected to nearly quadruple, from $40 billion in 2024 to $151 billion by 2027.

However, deriving value from these investments requires solutions that meet the scale, compliance, security, and usability requirements of complex enterprises.

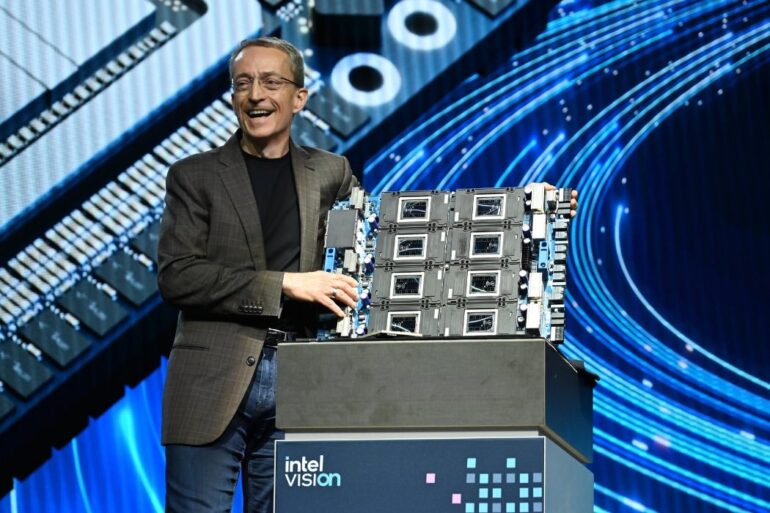

Following the Intel CEO’s keynote at the company’s Vision conference, where Intel announced more energy-efficient processing chips and an open AI app ecosystem, Asma Aziz, Canada Marketing Director at Intel, spoke with BetaKit about why the company believes the path to enterprise AI adoption runs through open ecosystems rather than walled gardens.

What does enterprise-grade AI look like?

The popular conception of AI has arguably been fuelled by ChatGPT. However, enterprises are playing a different game.

“Enterprises are all working to integrate generative AI (GenAI) in their businesses mostly to streamline and optimize operations and offer new services to customers,” said Aziz.

That goal is nothing new: enterprises grow by deepening customer relationships and finding internal efficiencies. What’s new are the barriers large businesses face when trying to use AI. First up is a limited opportunity to customize. Enterprises are large beasts with many existing tools, but current AI solutions either require large implementations (with significant cost) or are lightweight tools that are difficult to customize.

Second is the issue of transparency and trust. In particular, enterprises must ensure their AI tools don’t hallucinate (that is: present incorrect or misleading information as fact) and that all decision-making is referenceable (versus a black box). Enterprises also must ensure privacy is preserved. During the announcement at the Vision conference, Sachin Katti, Senior Vice President and General Manager of Intel Network and Edge Group (NEX), said that data is a company’s “most important asset.” Any AI must safeguard data from external actors and follow internal permission structures.

Of course, all of this needs to come as quickly and affordably as possible. Right now, AI solutions are incredibly expensive at enterprise scale and can take months (or even longer) to deploy successfully in any meaningful use case.

“Businesses need solutions that can be implemented quickly on enterprise infrastructure, produce context-aware results using proprietary data and models, and offer privacy, security, reliability and manageability,” said Aziz.

Open versus closed

Each enterprise is its own web of personalities, tools, physical infrastructure, and leadership perspectives. AI tooling only amplifies this complexity.

If the goal is enterprise usability of AI in a way that delivers more value to the organization and its customers, Intel believes the only way forward is through an open ecosystem. Aziz likens this to the “inflection point” experienced a decade ago in the open-source movement in software.

“Having an open and modular, more valuable and open ecosystem drives transparency and enables choice, which generates trust,” said Aziz. “We don’t want to create a single source of ‘truth’ where everyone is relying on what we dictate or consider as ‘correct.’ We want people to work collaboratively to maximize the potential of applied AI.”

Aziz also noted that open ecosystems are often more secure, since a closed ecosystem means a single point of failure for “breaches, leaks, and cyberattacks in general.”

Intel is working to build that open ecosystem, announcing a series of hardware and software solutions that allow third-party developers to build AI applications for the enterprise. This includes more power-efficient chips to design AI models and a new compute ecosystem for developers. The company is also partnering with other enterprises, including Amazon Web Services, Lenovo, SAP, and Red Hat, to build out the infrastructure.

This open ecosystem will enable a second element: modularity. It’s not just about allowing multiple members to build solutions; it’s about using a common framework and reference language so enterprises can take the pieces they need to fill their complex use cases.

“We believe that now is the time for the industry to coalesce around building an open platform for Enterprise AI where components can change without their GenAI application breaking,” said Aziz. “What Intel is trying to do is bring AI everywhere in a connected way with all the industry. We believe AI will empower people and this is what we are trying to convey at Intel Vision.”

For more content and news related to Intel’s AI solutions and latest announcements, visit the Intel Newsroom.